Scalability has always been one of the unavoidable topics for Ethereum. To truly become a "world computer," Ethereum needs scalability, security, and decentralization at the same time. Achieving all three at once is so difficult that the industry calls it the "Blockchain Trilemma," and it remains a major unsolved problem.

However, Danksharding, a new sharding scheme proposed by Ethereum researcher and developer Dankrad Feist at the end of 2021, appears to offer a transformative answer to the "Blockchain Trilemma" and could rewrite the rules of the game for the entire industry.

This research report attempts to explain, in layman's terms, what Ethereum's new sharding scheme Danksharding is and the background behind it. We wrote it because very few Chinese-language articles cover Danksharding, and most of those assume considerable background knowledge. We therefore break down the complex principles behind it in simple language, so that even a Web3 beginner can understand Danksharding and its precursor, EIP-4844.

Author: Spinach Spinach

Word count: This report exceeds 10,000 words, with an estimated reading time of 21 minutes.

Publishing platform:

https://research.web3caff.com/zh/archives/6259

Table of Contents

- Why does Ethereum need scalability?
  - Background of Ethereum's scalability
  - What is the Blockchain Trilemma?
  - What are the current scalability solutions for Ethereum?
- Ethereum's initial sharding scheme Sharding 1.0
  - How does Ethereum's POS consensus mechanism work?
  - What is the initial sharding scheme Sharding 1.0?
  - What are the drawbacks of the initial sharding scheme Sharding 1.0?
- What is Ethereum's new sharding scheme Danksharding?
  - Precursor scheme EIP-4844: Proto-Danksharding and the new Blob transaction type
  - Danksharding: the complete scalability solution
    - Data Availability Sampling
    - Erasure Coding
    - KZG Commitment
    - Proposer/Builder Separation
    - Anti-censorship list (crList)
    - Two-slot PBS
- Summary
- References

Why does Ethereum need scalability?

After Ethereum founder Vitalik Buterin published the Ethereum white paper, "A Next-Generation Smart Contract and Decentralized Application Platform," in 2014, blockchain entered a new era. Smart contracts let people build decentralized applications (DApps) on Ethereum, bringing a wave of innovation to the blockchain ecosystem, such as NFTs, DeFi, and GameFi.

Background of Ethereum's scalability

As Ethereum's on-chain ecosystem keeps growing and more people use it, Ethereum's performance limits have started to show. When many people transact at the same time, the blockchain becomes "congested." Think of a road controlled by traffic lights with fixed timing: normal traffic flows smoothly, but at rush hour far more cars arrive than can get through during each green light, so queues build up and every car takes longer to pass. A blockchain behaves the same way: when demand spikes, it takes longer for everyone's transactions to be confirmed.

On a blockchain, however, congestion costs more than time; it also drives up Gas fees. Gas fees can be understood as payment to miners for their work, since miners package and process all transactions on the blockchain. Because miners prioritize the highest-bidding transactions, users raise their Gas bids to get confirmed faster, setting off a "Gas War." A well-known example came in 2017, when the NFT project CryptoKitties went viral and congested the network, sending Gas fees sharply higher. During Gas Wars, a single interaction on Ethereum can cost dozens or even hundreds of dollars in Gas fees.

The root cause of such high Gas fees is that Ethereum's performance can no longer keep up with user demand. Ethereum's performance is measured differently from Bitcoin's. Bitcoin processes only transfers, as a simple ledger, so its throughput is commonly cited as about 7 transactions per second (TPS). Ethereum is different.

Because of smart contracts, transactions on Ethereum vary widely in content, so the number of transactions a block can hold (and therefore the TPS) depends on how much data, and more precisely how much Gas, the transactions in that block consume, which in turn depends on what users are doing at the moment. The following points about Ethereum's performance mechanism are helpful for understanding Danksharding, so be sure to read them:

- Ethereum caps block size in terms of Gas: each block can consume at most 30 million Gas.
- Ethereum does not want blocks to be too large on average, so each block has a Gas Target of 15 million Gas.
- Ethereum has a schedule of Gas costs, and different operations and types of data consume different amounts of Gas. According to Spinach's estimates, a block is roughly 5KB to 160KB in size, with an average of around 60KB to 70KB.
- When a block's Gas usage exceeds the 15 million Gas Target, the base fee of the next block rises, by up to 12.5% for a completely full block; when usage falls below the target, the base fee falls. This automatic, dynamic adjustment keeps raising costs during peak demand to relieve congestion and lowers costs during quiet periods to attract more transactions.
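The base-fee rule in the last point comes from EIP-1559. Below is a minimal Python sketch of that update rule, assuming the 15 million Gas Target above and the EIP-1559 change denominator of 8 (which is where the 12.5% maximum comes from). The function name `next_base_fee` is our own, not a client API. Note that the adjustment is proportional: a block halfway between the target and the limit raises the base fee by about 6.25%, not 12.5%.

```python
GAS_TARGET = 15_000_000              # Gas Target per block (from the text)
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # EIP-1559: 1/8 = 12.5% maximum change per block


def next_base_fee(parent_base_fee: int, parent_gas_used: int,
                  gas_target: int = GAS_TARGET) -> int:
    """Return the next block's base fee (in wei) following the EIP-1559 rule."""
    if parent_gas_used == gas_target:
        return parent_base_fee
    if parent_gas_used > gas_target:
        # The fee rises in proportion to how far usage exceeded the target,
        # and always by at least 1 wei.
        excess = parent_gas_used - gas_target
        delta = parent_base_fee * excess // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR
        return parent_base_fee + max(delta, 1)
    # The fee falls in proportion to how far usage fell short of the target.
    shortfall = gas_target - parent_gas_used
    delta = parent_base_fee * shortfall // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR
    return parent_base_fee - delta


if __name__ == "__main__":
    base = 100 * 10**9  # 100 gwei, an arbitrary example value
    print(next_base_fee(base, 30_000_000))  # full block: +12.5%
    print(next_base_fee(base, 22_500_000))  # halfway to the limit: +6.25%
    print(next_base_fee(base, 0))           # empty block: -12.5%
```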

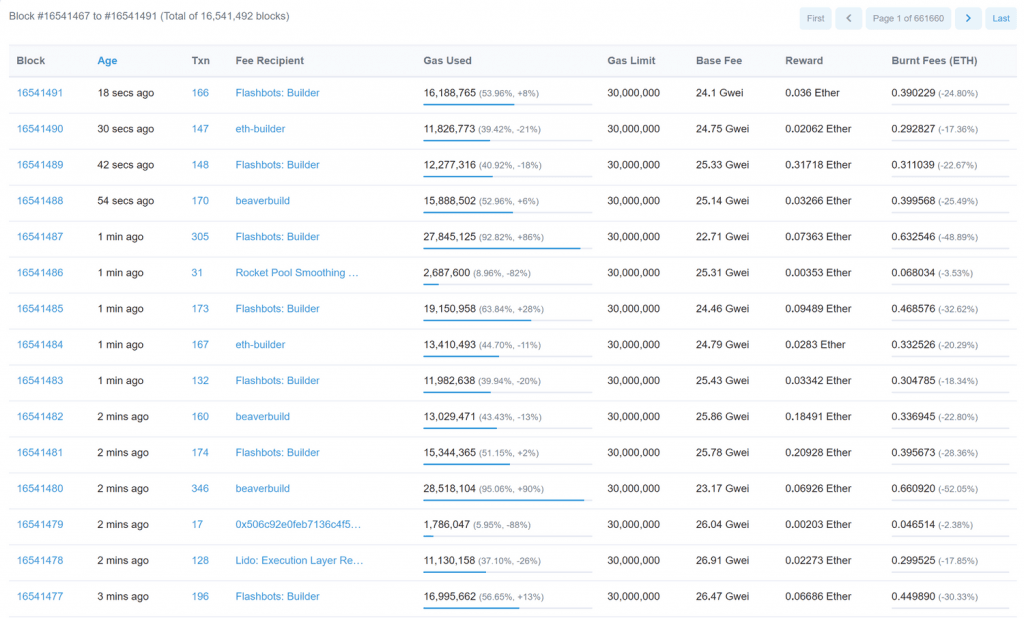

From these mechanisms we can conclude that Ethereum's TPS varies. We can estimate it by using a block explorer to count the transactions in each block. According to the chart, a typical block that just reaches the Gas Target contains about 160 transactions, and the busiest blocks contain over 300. With a 12-second block time, that works out to roughly 13 to 30 TPS, and Ethereum's TPS is generally reported to peak at about 45.
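The TPS estimate above is simple division. The small sketch below reproduces it and, for contrast, computes the ceiling if every transaction were a plain ETH transfer costing the minimum 21,000 Gas that any Ethereum transaction pays. The helper names are ours, for illustration only.

```python
SLOT_SECONDS = 12             # one block per 12-second slot after The Merge
GAS_LIMIT = 30_000_000        # maximum Gas per block
SIMPLE_TRANSFER_GAS = 21_000  # Gas used by a plain ETH transfer


def tps(transactions_per_block: float, slot_seconds: int = SLOT_SECONDS) -> float:
    """Transactions per second given how many transactions fit in one block."""
    return transactions_per_block / slot_seconds


def transfer_only_ceiling(gas_limit: int = GAS_LIMIT) -> float:
    """Upper bound on TPS if every transaction were a minimal 21,000-Gas transfer."""
    return tps(gas_limit // SIMPLE_TRANSFER_GAS)


if __name__ == "__main__":
    print(round(tps(160), 1))                # typical block at the Gas Target
    print(round(tps(300), 1))                # busy block
    print(round(transfer_only_ceiling(), 1)) # theoretical transfer-only ceiling
```

Real blocks never approach the transfer-only ceiling, because smart-contract calls consume far more Gas than a simple transfer; that is why observed TPS stays in the range quoted above.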

Image source: Mainnet | Beacon Chain Explorer (Phase 0) for Ethereum 2.0 – BeaconScan

Compared with VISA, the world-renowned payment network that can process tens of thousands of transactions per second, Ethereum's maximum of about 45 transactions per second is far too weak for a "world computer." Ethereum therefore urgently needs to scale, and this is crucial to its future. Scaling is not easy, however, because of the blockchain industry's "impossible triangle," the Blockchain Trilemma.

What is the Blockchain Trilemma?

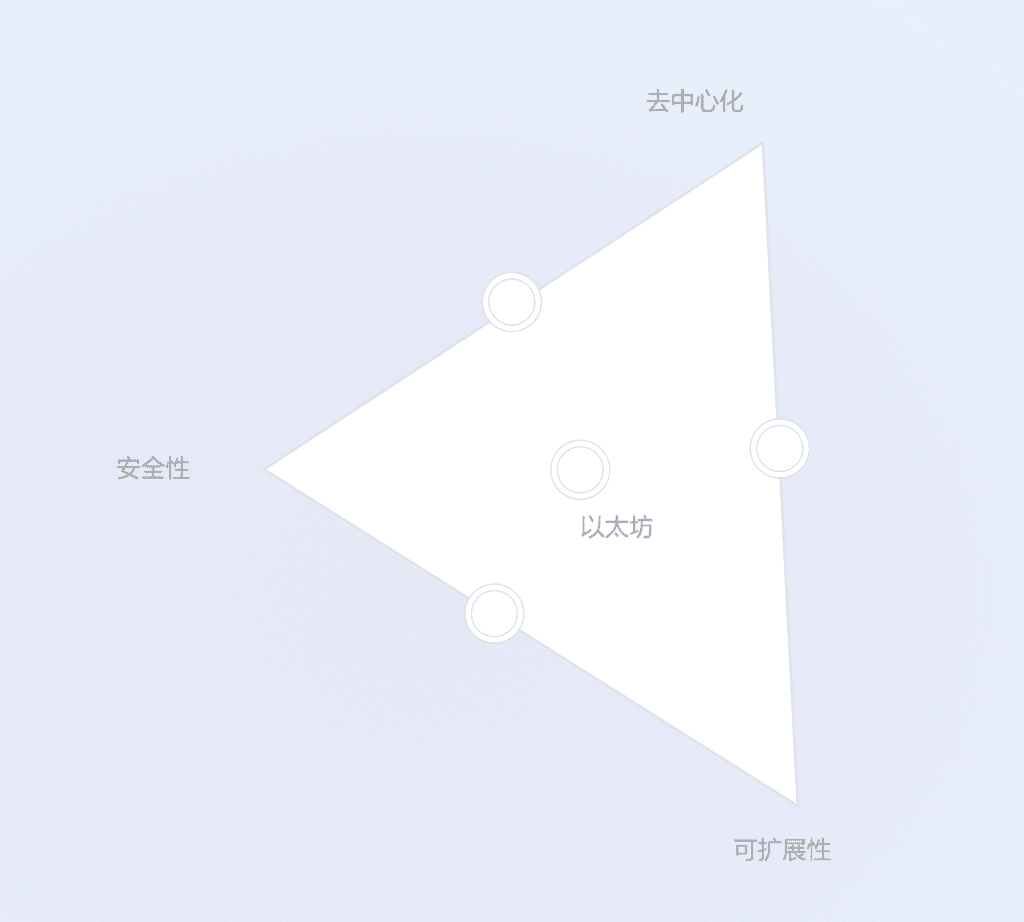

The "Blockchain Trilemma" refers to the fact that a public blockchain cannot simultaneously satisfy three characteristics: decentralization, security, and scalability.

- Decentralization: how decentralized the network's nodes are; the more nodes there are, the more decentralized the network.
- Security: the overall security of the blockchain network; the higher the cost of an attack, the more secure it is.
- Scalability: the blockchain's transaction-processing performance; the more transactions it can process per second, the more scalable it is.

Weighing these three properties, decentralization and security matter most. Decentralization is the cornerstone of Ethereum: it is what gives Ethereum its neutrality, censorship resistance, openness, data ownership, and nearly unbreakable security. The importance of security speaks for itself. Ethereum's vision is to scale while preserving both decentralization and security, which is exactly what makes the "Blockchain Trilemma" so hard to solve.

Image source: Ethereum Vision | ethereum.org

What are the current scalability solutions for Ethereum?

We know that to achieve scalability, Ethereum must ensure decentralization and security. To ensure decentralization and security, it must not overly increase the requirements on nodes during the scalability process. Nodes are essential roles in maintaining the entire Ethereum network; high requirements for nodes will hinder more people from becoming nodes, leading to increased centralization. Therefore, the lower the threshold for nodes, the better; low-threshold nodes will allow more people to participate, making Ethereum more decentralized and secure.

Ethereum currently has two scaling approaches: Layer 2 and sharding. Layer 2 scales the underlying blockchain (Layer 1) by executing transactions off-chain. There are several types of Layer 2, and this report focuses on one of them: the Rollup. A Rollup bundles hundreds of transactions off-chain and posts them to Ethereum as a single transaction, so all users share the cost of publishing to Ethereum. This makes each transaction much cheaper while inheriting Ethereum's security.
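The cost-sharing effect is easy to see with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical fixed overhead of 100,000 Gas per batch and a hypothetical 12 bytes of compressed data per transaction, priced at the 16 Gas Ethereum charges per non-zero calldata byte. Real Rollups differ in both numbers, so treat the output as illustrative only.

```python
BATCH_OVERHEAD_GAS = 100_000  # hypothetical fixed cost of posting one batch to L1
BYTES_PER_TX = 12             # hypothetical compressed size of one Rollup transaction
GAS_PER_CALLDATA_BYTE = 16    # Ethereum's price for a non-zero calldata byte
L1_TRANSFER_GAS = 21_000      # a plain ETH transfer on Layer 1


def gas_per_user(batch_size: int) -> float:
    """Layer 1 Gas attributable to each user when batch_size transactions share a batch."""
    shared_overhead = BATCH_OVERHEAD_GAS / batch_size  # split evenly across the batch
    own_data = BYTES_PER_TX * GAS_PER_CALLDATA_BYTE     # each user still pays for their bytes
    return shared_overhead + own_data


if __name__ == "__main__":
    for n in (10, 100, 1000):
        cost = gas_per_user(n)
        print(n, cost, f"{L1_TRANSFER_GAS / cost:.1f}x cheaper than an L1 transfer")
```

The larger the batch, the smaller each user's share of the fixed overhead, until the per-user cost is dominated by that user's own compressed data.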

There are currently two types of Rollup: Optimistic Rollup and ZK Rollup. Put simply, an Optimistic Rollup (OP Rollup) assumes that all transactions are honest, compresses many of them into a single transaction, and submits it to Ethereum. After submission there is a time window (the challenge period, currently one week) during which anyone can challenge a transaction they believe is invalid. As a result, a user who wants to move ETH from an OP Rollup back to Ethereum must wait until the challenge period ends for final confirmation.

A ZK Rollup, by contrast, proves that all transactions are valid with a zero-knowledge proof and uploads only the final state changes produced by executing them. ZK Rollups therefore have more potential: they do not need to upload every detail of the compressed transactions as Optimistic Rollups do, only a zero-knowledge proof and the final state-change data, so they can compress more data, and they need no long challenge period. The biggest drawback of ZK Rollups is that they are very hard to develop, so in the short term Optimistic Rollups will hold a significant share of the L2 market.

Besides Layer 2, the other scaling approach, and our main topic, is sharding. Layer 2 processes transactions off-chain, but however it processes them, it still has to publish its data to Ethereum, whose own capacity remains unchanged. The scaling that Layer 2 can achieve on its own is therefore limited.

Sharding aims to achieve scalability at the Layer 1 level of Ethereum, but we know that achieving scalability on Ethereum requires ensuring its decentralization and security, so we cannot increase the burden on nodes too much.

The specific implementation of sharding has been a topic of ongoing discussion within the Ethereum community. The latest proposal is the subject of this article, Danksharding. Before discussing Danksharding, Spinach will briefly introduce what the old sharding scheme looked like and why it was not adopted.

Ethereum's initial sharding scheme Sharding 1.0

Before discussing the Sharding 1.0 scheme, Spinach needs to introduce how Ethereum's current POS consensus mechanism operates, as this is essential knowledge for understanding both the Sharding 1.0 scheme and Danksharding. The explanation of the Sharding 1.0 scheme will be brief (just knowing what it aims to achieve is sufficient).

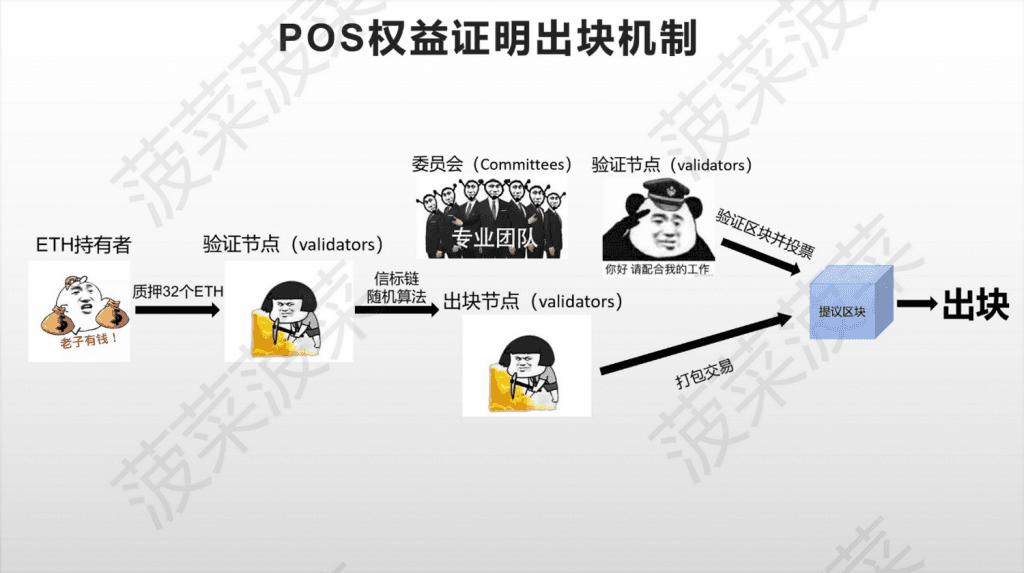

How does Ethereum's POS consensus mechanism work? [7]

The consensus mechanism is the system that lets all the nodes maintaining a blockchain network reach agreement, so its importance is self-evident. On September 15, 2022, as part of the Ethereum 2.0 upgrade, Ethereum completed "The Merge," joining the proof-of-work (POW) Ethereum mainnet with the proof-of-stake (POS) Beacon Chain and officially replacing POW with POS as Ethereum's consensus mechanism.

Under POW, miners compete for the right to produce blocks with accumulated computing power. Under POS, participants instead stake 32 ETH to become Ethereum validators, who are then chosen to produce blocks (how staking works is not covered here).

Besides the consensus mechanism, Ethereum's block timing also changed, from variable block times to fixed ones organized into two units: slots and epochs. A slot lasts 12 seconds, and an epoch contains 32 slots, or 6.4 minutes. In other words, one block is produced per 12-second slot, and 32 blocks are produced per epoch.

Once a participant has staked 32 ETH and become a validator, the Beacon Chain randomly selects one validator as the block producer for each block. In addition, every epoch the Beacon Chain randomly shuffles all validators and divides them evenly across the epoch's slots, forming a committee of at least 128 validators for each block.

This means each block is assigned a committee of about 1/32 of all validators, and the committee members vote to verify the block packaged by the block producer. Once the producer has packaged the block, it is successfully produced if more than two-thirds of the committee vote in favor.
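A minimal sketch of the committee assignment and the two-thirds vote described above. It uses Python's seeded `random.Random(...).shuffle` purely as a stand-in: the actual Beacon Chain derives its seed from RANDAO, uses a "swap-or-not" shuffle, and may split a slot into several committees. The function names are hypothetical.

```python
import random

SLOTS_PER_EPOCH = 32
MIN_COMMITTEE_SIZE = 128  # minimum committee size cited in the text


def assign_committees(validator_ids: list[int], seed: int) -> list[list[int]]:
    """Shuffle validators and split them evenly into one committee per slot."""
    if len(validator_ids) < SLOTS_PER_EPOCH * MIN_COMMITTEE_SIZE:
        raise ValueError("not enough validators for 128-member committees")
    shuffled = list(validator_ids)
    random.Random(seed).shuffle(shuffled)  # stand-in for the real shuffle
    # Slot i gets every 32nd validator starting at position i.
    return [shuffled[i::SLOTS_PER_EPOCH] for i in range(SLOTS_PER_EPOCH)]


def block_accepted(votes_for: int, committee_size: int) -> bool:
    """True if strictly more than two-thirds of the committee voted for the block."""
    return 3 * votes_for > 2 * committee_size


if __name__ == "__main__":
    committees = assign_committees(list(range(4096)), seed=2022)
    print(len(committees), len(committees[0]))  # 32 committees of 128 validators
    print(block_accepted(86, 128), block_accepted(85, 128))
```

Every validator lands in exactly one slot's committee per epoch, which is what "1/32 of the total" means in practice.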

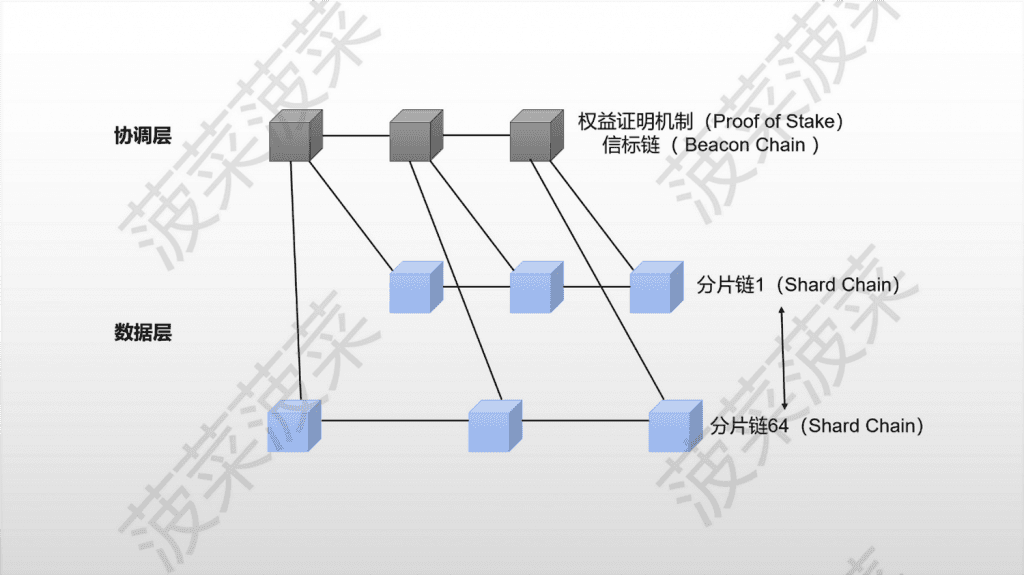

What is the initial sharding scheme Sharding 1.0? [7]

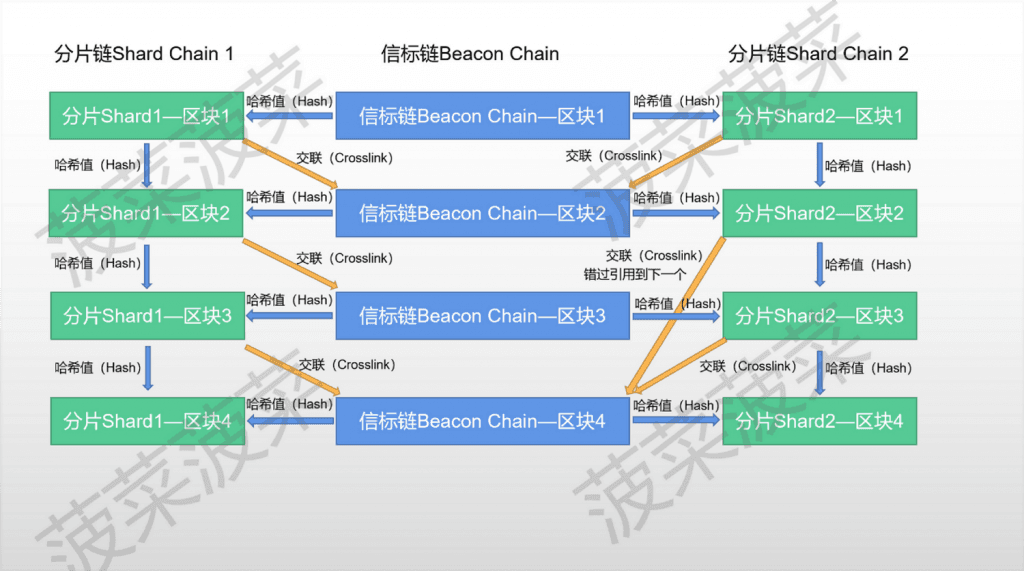

In the design concept of the initial sharding scheme Sharding 1.0, Ethereum was designed to have a maximum of 64 shard chains instead of just one main chain, achieving scalability by adding multiple new chains. In this scheme, each shard chain is responsible for processing Ethereum's data and handing it over to the beacon chain, which coordinates the entire Ethereum network. The block producers and committees for each shard chain are randomly assigned by the beacon chain.

The Beacon Chain and the shard chains are tied together through crosslinks. Each shard block references the hash of the Beacon Chain block in the same slot, and the shard block's own hash is then included in the next Beacon Chain block, forming the crosslink. If a shard block misses that Beacon Chain block, it can be included in a later one.

What are the drawbacks of the initial sharding scheme Sharding 1.0?

In simple terms, the Sharding 1.0 scheme involves splitting Ethereum into many shard chains to process data together and then handing the data over to the beacon chain to achieve scalability. However, this scheme has many drawbacks:

Development difficulties: Splitting Ethereum into 64 shard chains while ensuring they can operate normally is technically very challenging, and the more complex a system is, the more likely it is to have unforeseen vulnerabilities. If problems arise, fixing them can be very troublesome.

Data synchronization issues: The beacon chain will randomly shuffle the "committees" responsible for validation in each epoch, meaning that each time validating nodes are reassigned, it is a large-scale data synchronization for the network. If a node is assigned to a new shard chain, it needs to synchronize the data of that shard chain. Due to the varying performance and bandwidth of nodes, it is difficult to guarantee synchronization within the specified time. However, if nodes are required to synchronize all shard chain data, it will greatly increase the burden on nodes, leading to increased centralization of Ethereum.[2]

Data volume growth issues: Although processing capacity would improve significantly, having many shard chains process data in parallel also multiplies the amount of data that must be stored. Ethereum's data would grow many times faster than before, steadily raising nodes' storage requirements and pushing Ethereum toward centralization.

Inability to solve the MEV problem: Maximum Extractable Value (MEV) refers to the maximum value that can be extracted from block production beyond the standard block rewards and gas fees by adding and excluding transactions in a block and changing the order of transactions within the block. In Ethereum, once a transaction is initiated, it is placed in the mempool (a pool that holds pending transactions) waiting to be packaged by miners. Miners can see all transactions in the mempool, and they have significant power; they control the inclusion, exclusion, and order of transactions. If someone bribes miners by paying higher Gas fees to adjust the order of transactions in the pool for profit, this is considered a form of MEV.[6]

For example:

- One MEV technique is called "sandwich attack," where this MEV extraction method monitors large DEX transactions on-chain. For instance, if someone wants to purchase $1 million worth of a token on Uniswap, this transaction will significantly increase the token's price. When this transaction enters the mempool, a monitoring bot can detect it. The bot can then bribe the miner packaging this block to insert a buy operation for this token ahead of the person, followed by a sell operation after the person's purchase, effectively "sandwiching" the large DEX trader. This way, the person initiating the "sandwich attack" profits from the token due to the price increase caused by the large transaction, while the large trader incurs a loss.[6]
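To see why the sandwich works, the sketch below simulates a constant-product pool (x * y = k, the pricing model Uniswap v2 uses) with trading fees and Gas ignored. The pool sizes, trade sizes, and function names are hypothetical numbers chosen for illustration, not data from a real attack.

```python
def buy_tokens(usd_reserve: float, token_reserve: float, usd_in: float):
    """Swap usd_in for tokens in an x * y = k pool (no fees).
    Returns (tokens_out, new_usd_reserve, new_token_reserve)."""
    k = usd_reserve * token_reserve
    new_usd = usd_reserve + usd_in
    new_token = k / new_usd
    return token_reserve - new_token, new_usd, new_token


def sell_tokens(usd_reserve: float, token_reserve: float, tokens_in: float):
    """Swap tokens_in for USD. Returns (usd_out, new_usd_reserve, new_token_reserve)."""
    k = usd_reserve * token_reserve
    new_token = token_reserve + tokens_in
    new_usd = k / new_token
    return usd_reserve - new_usd, new_usd, new_token


def sandwich(usd_reserve, token_reserve, victim_usd, attacker_usd):
    """Return (victim tokens without attack, victim tokens with attack, attacker profit in USD)."""
    clean, _, _ = buy_tokens(usd_reserve, token_reserve, victim_usd)
    # 1. Front-run: the attacker buys first, pushing the price up.
    a_tokens, u, t = buy_tokens(usd_reserve, token_reserve, attacker_usd)
    # 2. The victim buys at the worse price.
    v_tokens, u, t = buy_tokens(u, t, victim_usd)
    # 3. Back-run: the attacker sells into the price the victim pushed up.
    a_usd, u, t = sell_tokens(u, t, a_tokens)
    return clean, v_tokens, a_usd - attacker_usd


if __name__ == "__main__":
    clean, attacked, profit = sandwich(10_000_000, 10_000_000, 1_000_000, 500_000)
    print(f"victim without attack: {clean:,.0f} tokens")
    print(f"victim with attack:    {attacked:,.0f} tokens")
    print(f"attacker profit:       ${profit:,.0f}")
```

In this toy pool the victim's $1 million buys roughly 9% fewer tokens, and that difference is roughly what the attacker pockets.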

The existence of MEV has continuously brought negative impacts to Ethereum, such as losses and poorer user experiences caused by "sandwich attacks," network congestion due to frontrunning competition, high Gas fees, and even node centralization issues. Nodes that extract more MEV can continuously occupy more shares in the network through their earnings, as more earnings = more ETH = more staking rights. Additionally, the high costs brought by MEV (network congestion and high GAS caused by frontrunning) will lead to continuous user loss for Ethereum. If the value of MEV significantly exceeds block rewards, it could even destabilize the consensus and security of the entire Ethereum network, and the Sharding 1.0 scheme cannot solve the series of problems brought by MEV.

What is Ethereum's new sharding scheme Danksharding?

Since Ethereum researcher and developer Dankrad Feist proposed the new sharding scheme Danksharding at the end of 2021, it has been widely recognized in the Ethereum community as the most promising way to scale through sharding, and it may bring a new revolution to Ethereum.

Danksharding takes a new approach to sharding, built around Layer 2 Rollups. It can scale Ethereum without significantly increasing the burden on nodes, thus preserving decentralization and security, and it also addresses the negative effects of MEV.

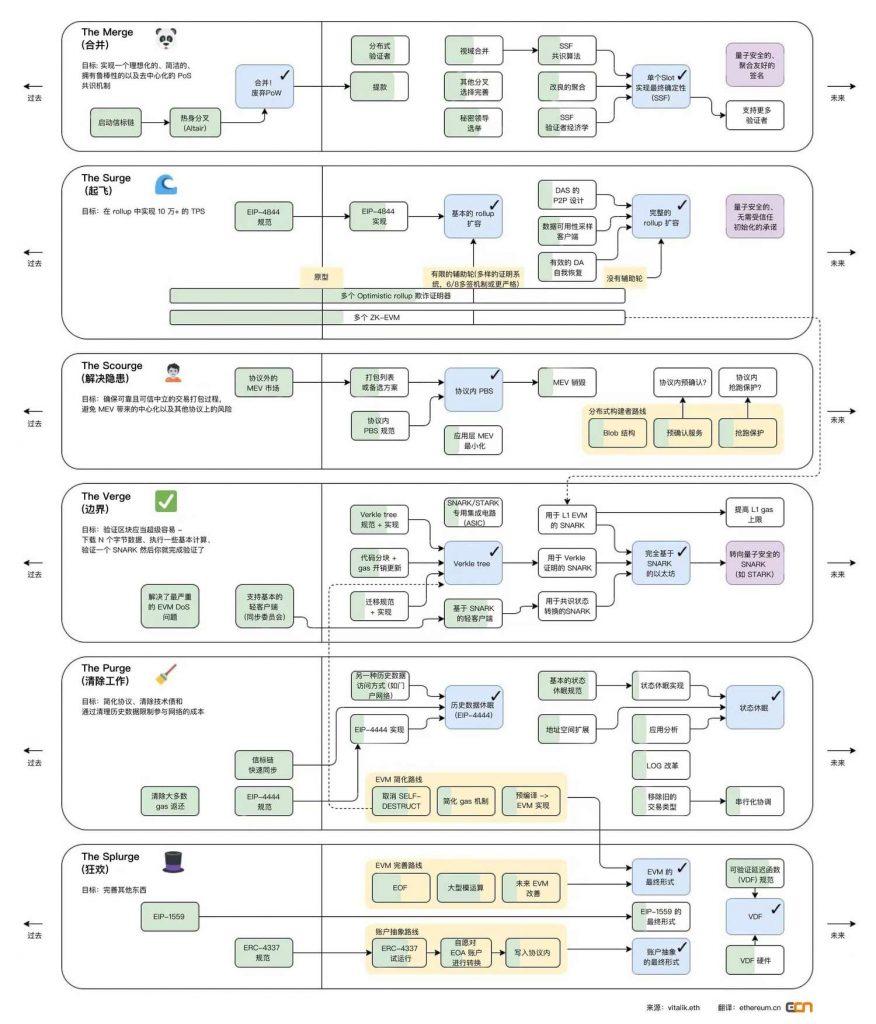

As the diagram shows, the upcoming Ethereum upgrade phases "The Surge" and "The Scourge" aim to achieve over 100,000 TPS through Rollups and to avoid the centralization and other protocol risks that MEV brings.

Image / Source: vitalik.eth Translation: ethereum.cn

So how does Danksharding solve Ethereum's scalability problem? Let's start with its precursor, EIP-4844 (Proto-Danksharding), which introduces a new transaction type: the Blob.

Precursor scheme EIP-4844: Proto-Danksharding and the new Blob transaction type

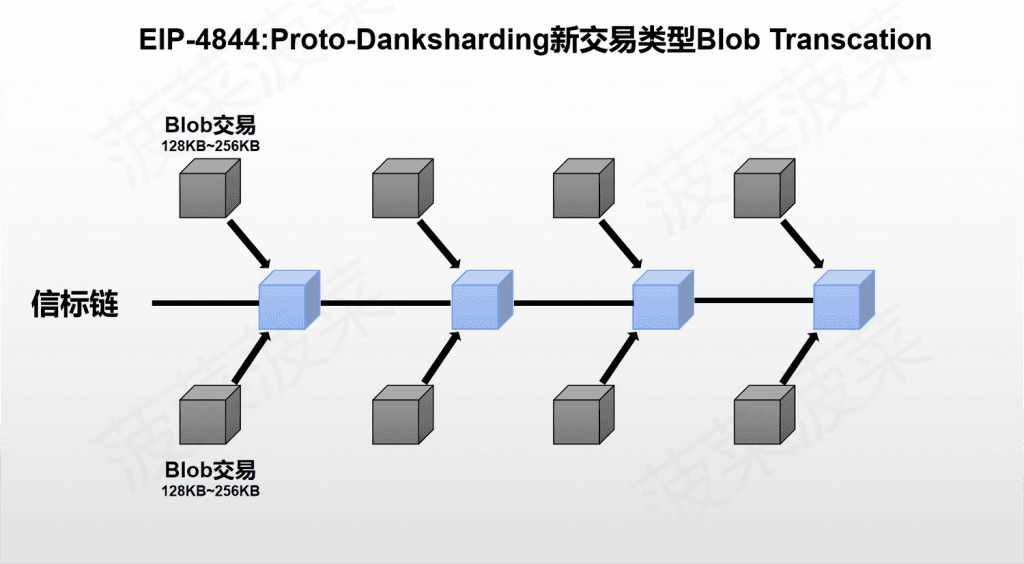

EIP-4844 introduces a new transaction type to Ethereum, the Blob transaction, which gives Ethereum extra data space outside the regular block. At the time of writing, the proposed parameters are:

- A Blob is about 128KB.
- A transaction can carry at most two Blobs (256KB).
- Each block targets 8 Blobs (1MB) and can hold at most 16 Blobs (2MB). The target works like the Gas Target described earlier.
- Blob data is stored only temporarily and is deleted after a period of time (the community currently suggests 30 days).

Ethereum blocks currently average only about 85KB, so the extra space Blobs provide is enormous. For perspective, all of Ethereum's ledger data since launch totals only about 1TB, while Blobs could give Ethereum an additional 2.5TB to 5TB of data per year, several times the size of the entire ledger.
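The Blob sizes above follow from the EIP-4844 blob layout: 4,096 field elements of 32 bytes each, which is 131,072 bytes (128KB). The sketch below multiplies that out for the proposed 8-Blob target and 16-Blob maximum over one year of 12-second slots. It assumes every slot produces a block and every block carries exactly that many Blobs, so it is an upper-end estimate; the function name is ours.

```python
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_BYTES = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131,072 bytes = 128 KiB
SLOT_SECONDS = 12
SECONDS_PER_YEAR = 365 * 24 * 3600
TIB = 2**40


def blob_bytes_per_year(blobs_per_block: int) -> int:
    """Blob data added per year if every 12-second slot carries blobs_per_block Blobs."""
    blocks_per_year = SECONDS_PER_YEAR // SLOT_SECONDS
    return blobs_per_block * BLOB_BYTES * blocks_per_year


if __name__ == "__main__":
    for blobs in (8, 16):  # proposed target and maximum per block
        per_block_mib = blobs * BLOB_BYTES / 2**20
        print(f"{blobs} Blobs: {per_block_mib:.0f} MiB/block, "
              f"{blob_bytes_per_year(blobs) / TIB:.2f} TiB/year")
```

The results, about 2.5TiB and 5TiB per year, match the range quoted above.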

The Blob transactions introduced by EIP-4844 can be said to be tailor-made for Rollup. The data of Rollup is uploaded to Ethereum in the form of Blob, and the additional data space allows Rollup to achieve higher TPS and lower costs, while also freeing up the block space originally occupied by Rollup for more users.

Because Blob data is stored only temporarily, this explosive growth in data does not place an ever-growing storage burden on nodes. If nodes keep only one month of Blob data, then in terms of synchronization they download an extra 1MB to 2MB per block, which is not a heavy bandwidth requirement. In terms of storage, nodes only need to keep a fixed extra amount of roughly 200GB to 400GB (one month of data). In other words, while preserving decentralization and security, a slight increase in node burden buys a tens- to hundreds-fold increase in TPS and reduction in cost, which makes this an excellent answer to Ethereum's scalability problem.
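The one-month storage figure can be checked the same way. This sketch assumes 30 days of retention, one block per 12-second slot, and 128KB Blobs, as in the proposal described above; the helper names are ours.

```python
BLOB_BYTES = 4096 * 32  # 128 KiB per Blob
SLOT_SECONDS = 12
GIB = 2**30


def retained_bytes(blobs_per_block: int, retention_days: int = 30) -> int:
    """Blob data a node must keep if Blobs are pruned after retention_days."""
    blocks = retention_days * 24 * 3600 // SLOT_SECONDS
    return blobs_per_block * BLOB_BYTES * blocks


def download_rate_kib_per_s(blobs_per_block: int) -> float:
    """Average extra download bandwidth needed to keep up with Blob data."""
    return blobs_per_block * BLOB_BYTES / SLOT_SECONDS / 1024


if __name__ == "__main__":
    for blobs in (8, 16):
        print(f"{blobs} Blobs: {retained_bytes(blobs) / GIB:.0f} GiB stored, "
              f"{download_rate_kib_per_s(blobs):.0f} KiB/s extra download")
```

That gives roughly 211GiB and 422GiB of storage, in line with the 200GB to 400GB estimate, and an average download rate under 200KiB/s, which is why the bandwidth cost is described as modest.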

What if users want to access historical data after the data has been cleared?

First, the purpose of Ethereum's consensus protocol is not to guarantee the eternal storage of all historical data. On the contrary, its purpose is to provide a highly secure real-time bulletin board and leave space for long-term storage for other decentralized protocols. The existence of the bulletin board ensures that the data published on it remains for a sufficiently long time, allowing any user or protocol that wants this data enough time to fetch and save it. Therefore, the responsibility for saving this Blob data is entrusted to other roles, such as Layer 2 project parties, decentralized storage protocols, etc.[3]

Danksharding: the complete scalability solution

EIP-4844 is the first step in scaling Ethereum around Rollups, but the capacity it adds is far from enough. The complete Danksharding scheme raises the Blob data per block from 1MB (2MB maximum) to 16MB (32MB maximum), and introduces a new mechanism, Proposer/Builder Separation (PBS), to address the problems caused by MEV.

To scale further beyond EIP-4844, two challenges must be overcome:

Excessive node burden: The 1MB to 2MB of Blob data per block in EIP-4844 adds an acceptable burden on nodes, but increasing it 16-fold to 16MB to 32MB would make data synchronization and storage too demanding for nodes, reducing Ethereum's decentralization.

Data availability issues: If nodes no longer download all Blob data, data availability becomes a problem, because nothing guarantees that the data was actually published and can be retrieved. For example, suppose an Ethereum node suspects a transaction on an Optimistic Rollup and wants to challenge it, but the Rollup withholds the data; without the original data, the node cannot prove the transaction is invalid. Solving data availability therefore means ensuring the data is always published and accessible.

So how does Danksharding solve these problems?

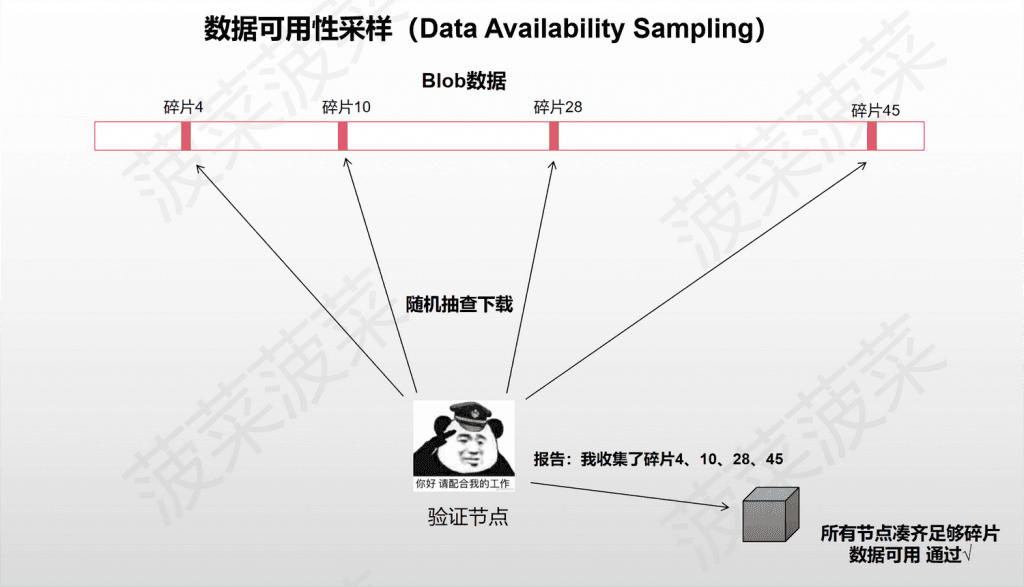

Data Availability Sampling (DAS)

Danksharding proposes a solution—Data Availability Sampling (DAS)—to reduce the burden on nodes while ensuring data availability.

The idea of Data Availability Sampling is to split Blob data into fragments and have nodes randomly sample a few fragments instead of downloading the whole Blob. The fragments are then spread across Ethereum's nodes, and as long as there are enough nodes and they are sufficiently decentralized, the network as a whole holds the complete Blob data.

For example, suppose a Blob's data is split into 10 fragments and the network has 100 nodes. Each node randomly samples and downloads one fragment and submits the number of the fragment it sampled to the block. As long as every numbered fragment has been collected, Ethereum treats the Blob data as available, since the original data can be rebuilt by piecing the fragments together. There is a very small probability that none of the 100 nodes samples some particular fragment, in which case that data is lost; this slightly reduces security, but the probability is low enough to be acceptable.
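How small is "very small"? The sketch below computes the exact probability, by inclusion-exclusion, that at least one fragment goes unsampled when each node picks one fragment uniformly at random. This is the simple model of the example, without Erasure Coding, where the data is lost if any fragment is missed. The function names are ours.

```python
from math import comb


def p_some_fragment_missed(fragments: int, nodes: int) -> float:
    """Exact probability that at least one fragment is sampled by no node,
    when each node independently samples one fragment uniformly at random."""
    # Inclusion-exclusion over the set of fragments that nobody sampled.
    return sum(
        (-1) ** (j + 1) * comb(fragments, j) * (1 - j / fragments) ** nodes
        for j in range(1, fragments + 1)
    )


def union_bound(fragments: int, nodes: int) -> float:
    """Simple upper bound: fragments * P(one given fragment is never sampled)."""
    return fragments * (1 - 1 / fragments) ** nodes


if __name__ == "__main__":
    print(p_some_fragment_missed(10, 100))  # about 2.66e-4
    print(union_bound(10, 100))
```

With 10 fragments and 100 nodes the chance of losing a fragment is roughly 1 in 3,800, and it shrinks quickly as the number of nodes grows.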

To implement Data Availability Sampling (DAS), Danksharding employs two technologies: Erasure Coding and KZG Commitment.

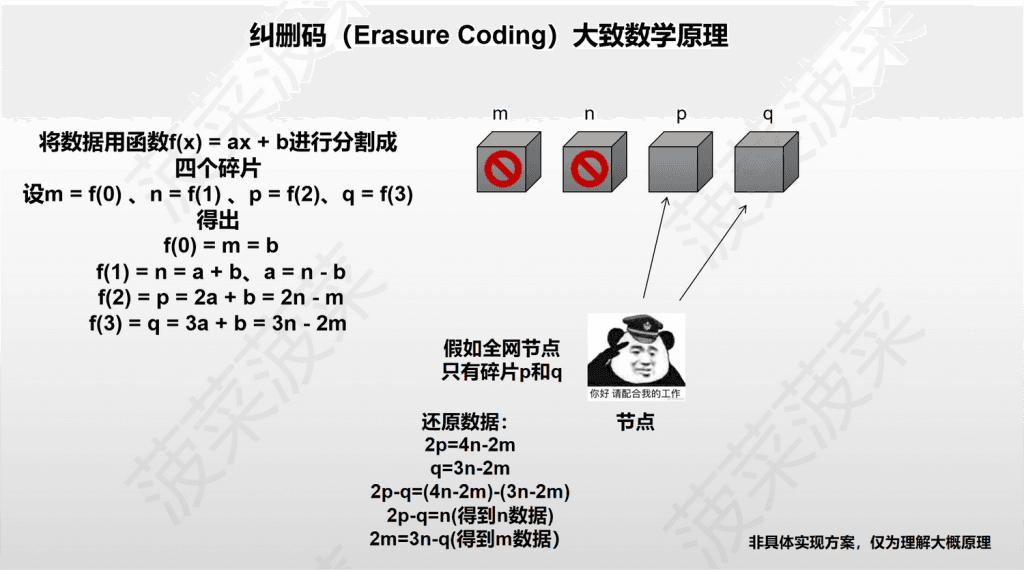

Erasure Coding

Erasure Coding is a fault-tolerant coding technique: it extends the data with redundant fragments so that Ethereum nodes can restore the original data from at least half of the fragments, which greatly reduces the chance of data loss. The full principle is quite complex, but here is a simplified explanation using a simple formula:[2]

- First, construct a linear function f(x) = ax + b and evaluate it at 4 points, x = 0, 1, 2, 3.
- Let m = f(0) = b and n = f(1) = a + b (these two values stand for the original data), so b = m and a = n - m.
- Let p = f(2) and q = f(3); then p = 2a + b = 2n - m and q = 3a + b = 3n - 2m.
- Then m, n, p, and q are distributed among the nodes in the network as four fragments.
- By the formulas above, finding any two of the fragments is enough to calculate the other two.
- If we find m and n, we can directly calculate p = 2n - m and q = 3n - 2m.
- If we find p and q, then from 2p = 4n - 2m and q = 3n - 2m we get n = 2p - q, and then m = 2n - p.

In short, Erasure Coding uses mathematics to split Blob data into many fragments so that Ethereum nodes can restore the original data from at least half of them, making the probability of collecting too few fragments negligible.
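The four-fragment example generalizes: treat k data values as evaluations of a polynomial of degree below k, publish 2k evaluations, and any k of them determine the polynomial. The sketch below does this with Lagrange interpolation over a small prime field. The modulus 2^31 - 1 and the function names are our own choices for illustration; Danksharding works over the much larger BLS12-381 scalar field and uses optimized FFT-based encoding rather than this quadratic-time loop.

```python
P = 2**31 - 1  # a prime modulus chosen for illustration


def lagrange_eval(points: list[tuple[int, int]], x: int, p: int = P) -> int:
    """Evaluate, at x, the unique lowest-degree polynomial through `points` (mod p)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % p
                den = den * (xi - xj) % p
        total = (total + yi * num * pow(den, -1, p)) % p  # modular inverse of den
    return total


def extend(data: list[int], p: int = P) -> list[int]:
    """Treat data[i] as f(i) and return the 2k fragments f(0), ..., f(2k - 1)."""
    base = list(enumerate(data))
    return [lagrange_eval(base, x, p) for x in range(2 * len(data))]


def recover(fragments: dict[int, int], k: int, p: int = P) -> list[int]:
    """Rebuild the original k values from any k fragments given as {x: f(x)}."""
    if len(fragments) < k:
        raise ValueError("need at least k fragments")
    points = list(fragments.items())[:k]
    return [lagrange_eval(points, x, p) for x in range(k)]


if __name__ == "__main__":
    # The text's example with m = f(0) = 5 and n = f(1) = 7.
    print(extend([5, 7]))                 # [5, 7, 9, 11], i.e. p = 2n - m, q = 3n - 2m
    print(recover({2: 9, 3: 11}, k=2))    # [5, 7], recovered from p and q alone
```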

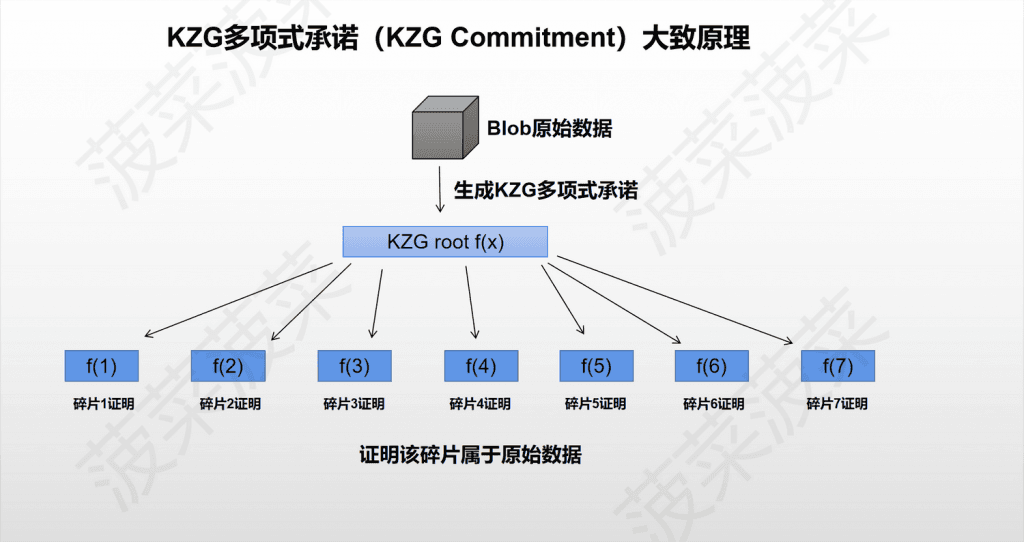

KZG Commitment

KZG Commitment is a cryptographic technique that addresses the integrity of the fragments produced by Erasure Coding. Because nodes only sample a few fragments, they cannot tell on their own whether a fragment really comes from the original Blob data. The party that performs the encoding therefore also publishes a KZG Commitment, which lets anyone verify that each Erasure Coding fragment is genuinely part of the original data. KZG plays a role somewhat like a Merkle tree but is structured differently: every KZG proof is an opening of the same committed polynomial.
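To make "every proof opens the same polynomial" concrete, the sketch below shows, in the clear, the algebraic check behind a KZG opening. If f(z) = y, then f(x) - y is divisible by (x - z); the proof is the quotient q evaluated at a secret point s, so that f(s) - y = q(s)(s - z). This is a deliberately insecure toy. Real KZG never reveals s: it comes from a trusted setup, the commitment and proof are elliptic-curve points encoding f(s) and q(s), and the verifier checks the same equation with a pairing on BLS12-381. The modulus, the secret, and all names here are hypothetical.

```python
P = 2**61 - 1           # prime modulus for the toy field (illustrative choice)
TOY_SECRET = 123456789  # in real KZG, s stays hidden inside a trusted setup


def poly_eval(coeffs: list[int], x: int, p: int = P) -> int:
    """Evaluate sum(coeffs[i] * x**i) mod p using Horner's rule."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % p
    return acc


def quotient(coeffs: list[int], z: int, p: int = P) -> list[int]:
    """Coefficients of q(x) = (f(x) - f(z)) / (x - z), via synthetic division."""
    q = [0] * (len(coeffs) - 1)
    acc = 0
    for i in range(len(coeffs) - 1, 0, -1):
        acc = (acc * z + coeffs[i]) % p
        q[i - 1] = acc
    return q


def commit(coeffs: list[int]) -> int:
    """Toy commitment f(s). Real KZG publishes f(s) as a curve point, not a number."""
    return poly_eval(coeffs, TOY_SECRET)


def open_at(coeffs: list[int], z: int) -> tuple[int, int]:
    """Return the claimed value y = f(z) and the proof q(s)."""
    return poly_eval(coeffs, z), poly_eval(quotient(coeffs, z), TOY_SECRET)


def verify(commitment: int, z: int, y: int, proof: int) -> bool:
    """Check f(s) - y == q(s) * (s - z); real KZG checks this with a pairing."""
    return (commitment - y) % P == proof * (TOY_SECRET - z) % P


if __name__ == "__main__":
    poly = [3, 1, 4, 1, 5]  # stand-in for a polynomial derived from Blob data
    c = commit(poly)
    y, proof = open_at(poly, z=7)
    print(verify(c, 7, y, proof))      # True: the fragment matches the commitment
    print(verify(c, 7, y + 1, proof))  # False: a tampered fragment is rejected
```

One commitment serves every fragment: the same `c` verifies an opening at any point z, which is how a node can check any fragment it happens to sample.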

Through Erasure Coding and KZG Commitment, Danksharding achieves Data Availability Sampling (DAS), greatly reducing the burden on nodes while allowing Blob data to grow to 16MB to 32MB per block. The Ethereum community has also proposed a 2D KZG scheme, which extends the data along two dimensions to further reduce bandwidth and computation requirements. The exact algorithm is still under active discussion, and the design of DAS continues to be optimized.
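Why does sampling only a few fragments suffice once Erasure Coding is in place? With data extended to twice its size, a builder who wants to make the Blob unrecoverable must withhold more than half of the fragments, so each random sample a node draws finds an available fragment with probability below one half. A node that draws k independent samples and finds them all available is therefore fooled with probability below (1/2)^k. The sketch below is a simplified one-dimensional model that ignores the 2D layout and network details; it computes this bound and how many samples reach a chosen confidence. The function names are ours.

```python
def fooled_probability(samples: int, available_fraction: float = 0.5) -> float:
    """Chance that all `samples` independent random samples hit available fragments.
    An unrecoverable Blob has available_fraction < 0.5, so 0.5 gives an upper bound."""
    return available_fraction ** samples


def samples_needed(max_fooled: float, available_fraction: float = 0.5) -> int:
    """Smallest number of samples that pushes the fooling chance to max_fooled or below."""
    if not 0 <= available_fraction < 1:
        raise ValueError("available_fraction must be in [0, 1)")
    k = 0
    while fooled_probability(k, available_fraction) > max_fooled:
        k += 1
    return k


if __name__ == "__main__":
    print(fooled_probability(30))  # about 9.3e-10
    print(samples_needed(1e-6))    # 20 samples for a one-in-a-million bound
```

The model assumes sampling with replacement; drawing distinct fragments without replacement only makes the bound tighter.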

For Ethereum, Data Availability Sampling (DAS) solves the problem of achieving Blob data volume expansion to 16MB to 32MB while reducing the burden on nodes. However, there seems to be another issue: Who will encode the original data?

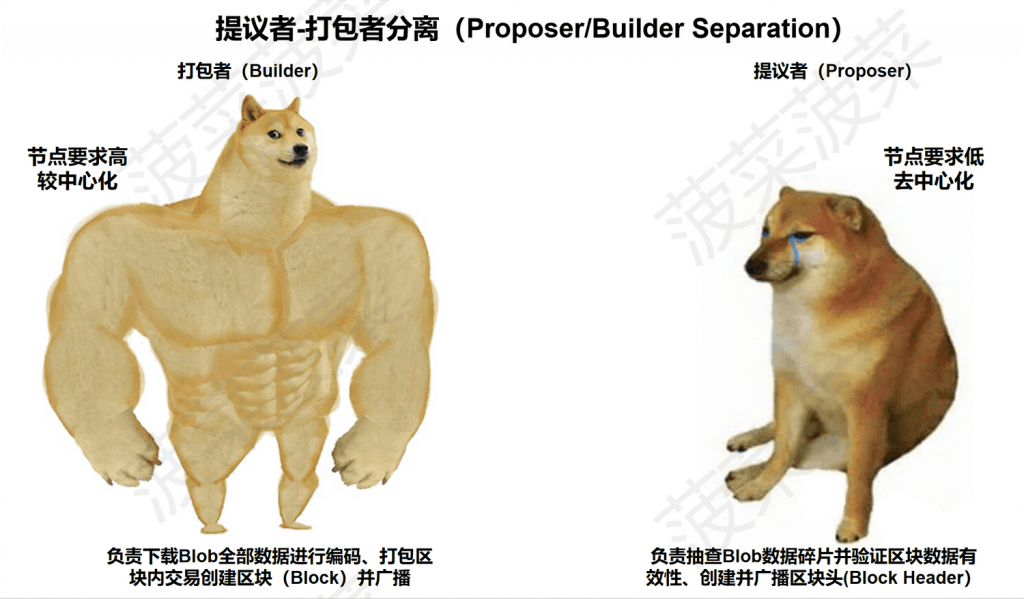

If the original Blob data needs to be encoded, the nodes performing the encoding must have the complete original data. Achieving this would impose higher requirements on nodes. Therefore, as Spinach mentioned earlier, Danksharding proposes a new mechanism called Proposer/Builder Separation (PBS) to address the issues brought by MEV, which also solves the encoding problem.

Proposer/Builder Separation (PBS)

As we have seen, Data Availability Sampling (DAS) lowers the burden of validating Blob data, so validation can run on modest hardware and stay decentralized. Creating the block, however, still requires holding the complete Blob data and encoding it, which raises the bar for Ethereum full nodes. Proposer/Builder Separation (PBS) therefore splits nodes into two roles, Builders and Proposers: high-performance nodes can become Builders, while lower-performance nodes act as Proposers.

Currently, Ethereum nodes are divided into two types: full nodes and light nodes. Full nodes need to synchronize all data on Ethereum, such as transaction lists and block bodies, and they perform both block packaging and validation roles. Since full nodes can see all information within a block, they can reorder or add/remove transactions to extract MEV value. Light nodes do not need to synchronize all data; they only need to synchronize block headers to validate block production.[1]

After implementing Proposer/Builder Separation (PBS):

- High-performance nodes can become Builders, which download Blob data, encode it, build the block (Block), and broadcast it to other nodes for sampling. Builders must synchronize more data and need high bandwidth, so this role tends toward some centralization.
- Lower-performance nodes can become Proposers, which validate the data and create and broadcast the block header (Block Header). Proposers synchronize less data and need less bandwidth, so this role can remain decentralized.

PBS achieves a division of labor among nodes by separating the roles of packaging and validation, allowing high-performance nodes to be responsible for downloading all data for encoding and distribution, while low-performance nodes handle sampling validation. So how is the MEV problem addressed?

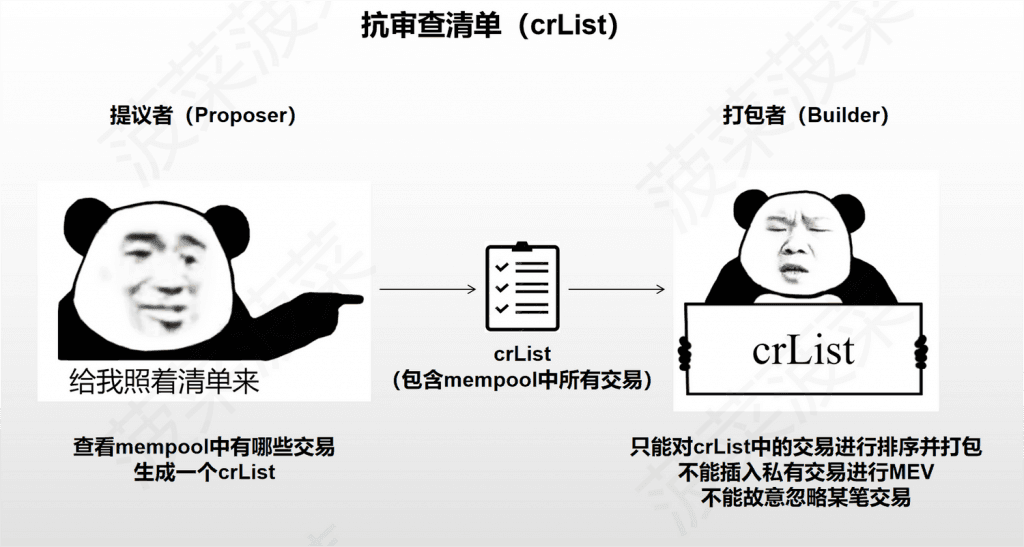

Anti-censorship list (crList)

Since PBS separates the packaging and validation tasks, Builders have greater power to censor transactions. Builders can intentionally ignore certain transactions and arbitrarily reorder and insert their own transactions to extract MEV. However, the anti-censorship list (crList) addresses these issues.

The mechanism of the anti-censorship list (crList):[1]

- Before Builders package a block's transactions, the Proposer first publishes an anti-censorship list (crList) that includes all transactions in the mempool.
- Builders can only choose and order transactions from the crList, meaning they cannot insert their own private transactions to extract MEV or deliberately exclude a transaction (unless the Gas limit is reached).
- After a Builder packages the block, it broadcasts the hash of its final transaction list to the Proposer, who selects one transaction list, generates the block header (Block Header), and broadcasts it.
- When nodes synchronize, they obtain the block header from the Proposer and the block body from the Builder, which ensures that the block body is the finally selected version.
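The anti-censorship rule in the second point can be phrased as a validity check: a crList transaction may be left out of the block only if it would no longer fit under the Gas limit. The minimal sketch below encodes just that rule. The `Tx` type and `satisfies_crlist` are hypothetical names, and a real design also has to handle transaction ordering, nonces, and fees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_hash: str
    gas: int


def satisfies_crlist(block_txs: list[Tx], cr_list: list[Tx], gas_limit: int) -> bool:
    """Hypothetical check: every crList transaction is either in the block
    or could not have fit in the remaining Gas."""
    included = {tx.tx_hash for tx in block_txs}
    gas_used = sum(tx.gas for tx in block_txs)
    for tx in cr_list:
        if tx.tx_hash not in included and gas_used + tx.gas <= gas_limit:
            return False  # the Builder skipped a transaction that still fit: censorship
    return True


if __name__ == "__main__":
    a, b = Tx("a", 21_000), Tx("b", 50_000)
    print(satisfies_crlist([a, b], [a, b], gas_limit=100_000))  # True
    print(satisfies_crlist([a], [a, b], gas_limit=100_000))     # False: b was censored
```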

The anti-censorship list (crList) effectively mitigates the negative impacts of MEV, such as "sandwich attacks," as nodes can no longer extract similar MEV by inserting private transactions.

The specific implementation of PBS in Ethereum is still under discussion, and the currently possible preliminary implementation scheme is the Two-slot PBS.

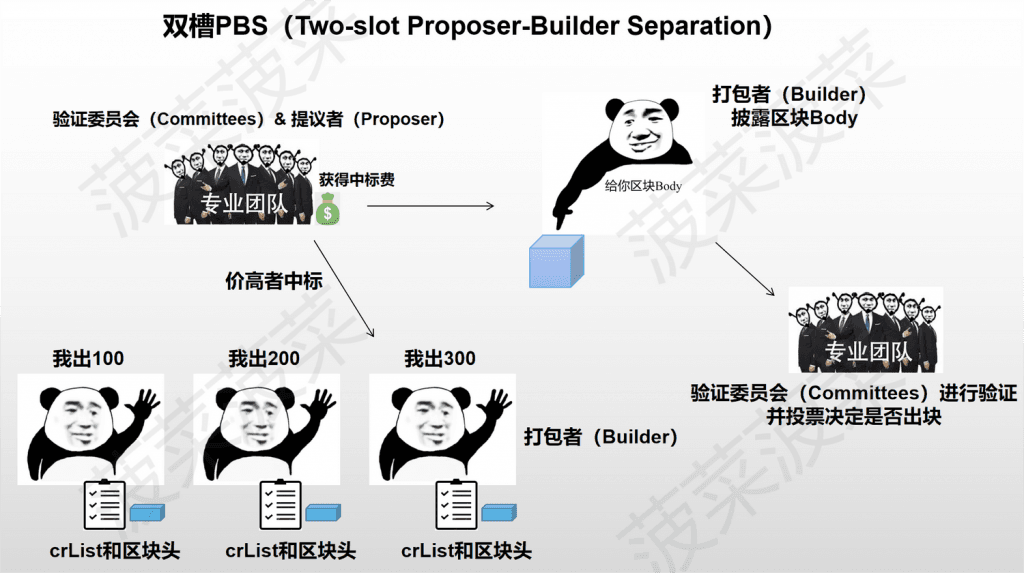

Two-slot PBS

Two-slot PBS adopts a bidding model to determine block production:[2]

- Builders obtain the crList, create a block header for their transaction list, and place a bid.
- The Proposer selects the winning block header and Builder, and receives the winning bid unconditionally (whether or not a valid block is eventually produced).
- The validation committee confirms the winning block header.
- The Builder reveals the winning block body.
- The validation committee confirms the winning block body and votes to validate it (if the Builder deliberately withholds the block body, the block is treated as nonexistent).
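The bidding flow above can be sketched as a tiny two-step procedure. This is a simplified model under our own assumptions: the highest bid wins, the header is a SHA-256 hash of the body, and there is one winner per slot. The class and function names are hypothetical and do not come from any Ethereum client or specification.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


def header_of(body: str) -> str:
    """Hypothetical block header: a SHA-256 commitment to the block body."""
    return hashlib.sha256(body.encode()).hexdigest()


@dataclass(frozen=True)
class Bid:
    builder: str
    header: str  # commitment to the Builder's block body
    amount: int  # payment offered to the Proposer


@dataclass
class SlotResult:
    winner: Bid
    proposer_payment: int
    block_body: Optional[str]  # None means the block is treated as nonexistent


def run_two_slot_pbs(bids: list[Bid], revealed_body: Optional[str]) -> SlotResult:
    """Slot 1: the Proposer picks the highest bid and is paid regardless.
    Slot 2: the winning Builder must reveal a body matching its header."""
    if not bids:
        raise ValueError("no bids")
    winner = max(bids, key=lambda bid: bid.amount)
    valid = revealed_body is not None and header_of(revealed_body) == winner.header
    return SlotResult(winner, winner.amount, revealed_body if valid else None)


if __name__ == "__main__":
    bids = [Bid("A", header_of("txs-A"), 5), Bid("B", header_of("txs-B"), 9)]
    print(run_two_slot_pbs(bids, "txs-B"))  # B wins, Proposer paid 9, block exists
    print(run_two_slot_pbs(bids, None))     # B withholds: Proposer still paid 9
```

Because the Proposer is paid in the first step no matter what, a Builder that withholds its body only burns its own bid.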

Builders can still extract MEV by reordering transactions, but the bidding in Two-slot PBS forces them into cutthroat competition. When everyone must bid for the right to build the block, the MEV profits of centralized Builders are steadily squeezed, and most of that value ends up with the decentralized Proposers. This counters the trend of Builders becoming ever more centralized through MEV extraction.

Two-slot PBS does have a design flaw, as its name suggests: producing a valid block takes two slots, which stretches the time to produce a valid block to 24 seconds (one slot = 12 seconds). The Ethereum community is actively discussing how to address this.

Summary

Danksharding provides a transformative solution for Ethereum to address the "Blockchain Trilemma," achieving scalability while ensuring Ethereum's decentralization and security:

- Through the precursor EIP-4844 (Proto-Danksharding), a new transaction type, the Blob, is introduced; the extra 1MB to 2MB of data that Blobs carry per block helps Rollups achieve higher TPS and lower costs.
- Through Erasure Coding and KZG Commitment, Data Availability Sampling (DAS) is implemented, letting nodes verify data availability by sampling only some of the data fragments, which reduces their burden.
- With DAS in place, the extra Blob data is expanded to 16MB to 32MB per block, further increasing scalability.
- Through Proposer/Builder Separation (PBS), the work of packaging and validating blocks is divided between two node roles, combining centralized block building with decentralized validation.
- Through the anti-censorship list (crList) and Two-slot PBS, the negative effects of MEV are significantly reduced, preventing Builders from inserting private transactions or censoring specific transactions.

If all goes well, the precursor scheme EIP-4844 is expected to be officially implemented in the Cancun upgrade after the Shanghai upgrade of Ethereum. The most direct benefit of the implementation of EIP-4844 will be for Rollup in Layer 2 and the ecosystem on Rollup. Higher TPS and lower costs are very suitable for high-frequency applications on-chain, and we can imagine that some "killer applications" may emerge. The centralized block production, decentralized validation, and anti-censorship achieved by Danksharding will bring a new narrative to Ethereum's public chain. What kind of chemical reactions will modular blockchains and Ethereum after Danksharding produce?

Spinach believes that the implementation of Danksharding will rewrite the entire game rules, leading Ethereum into a new era in the blockchain industry!

References

[1] Data Availability, Blockchain's Storage Expansion

[2] Understanding Ethereum's new upgrade scheme Danksharding in one article

[3] Proto-Danksharding FAQ – HackMD

[4] What is V God’s popular science on "Danksharding"?

[6] Buidler DAO article: How to rescue NFTs from hackers after a wallet is stolen?

[7] History Repeats? A Detailed Explanation of Ethereum 2.0 and Hard Forks